DeepSeek

What does it mean for AI going forward?

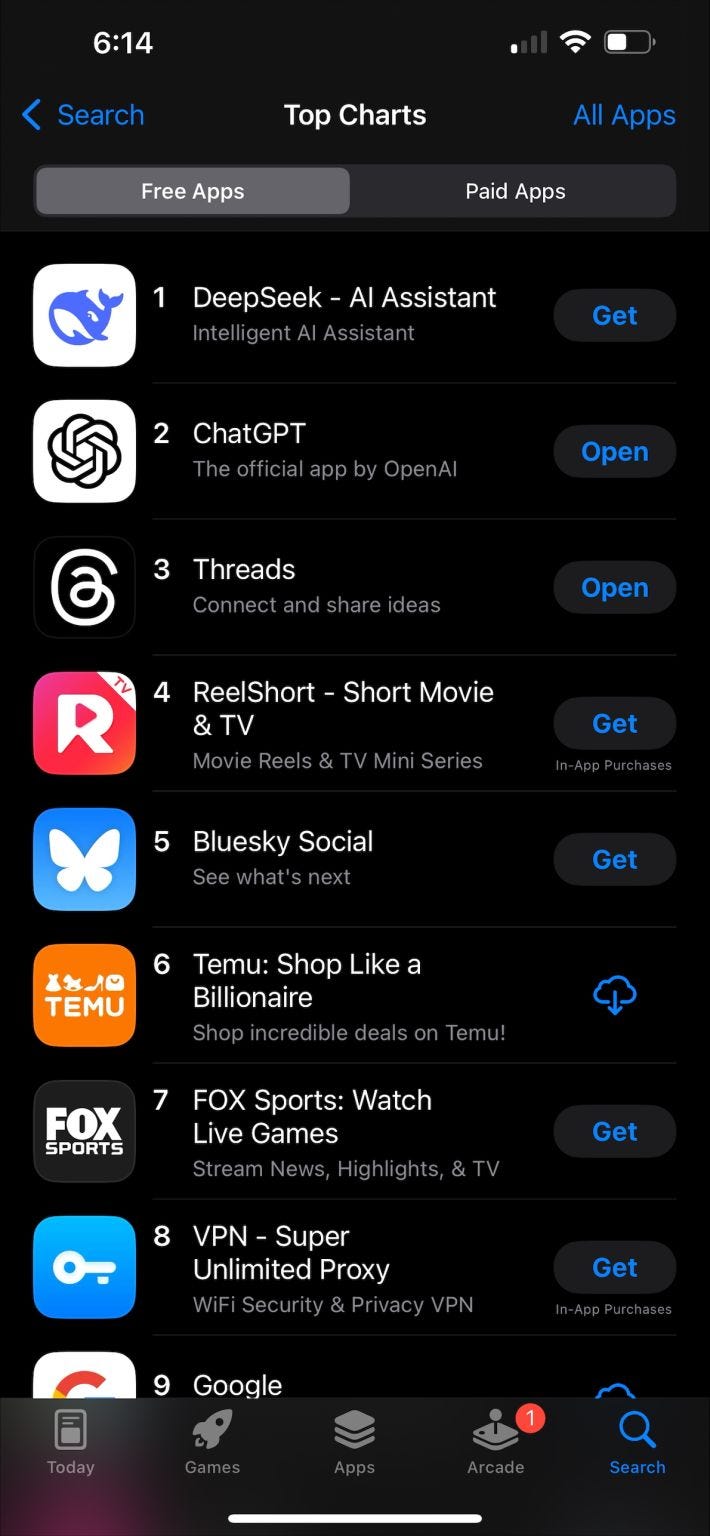

DeepSeek has now surpassed OpenAI’s ChatGPT as the most popular app on the App Store. Mainstream media has positioned this as something that “came out of nowhere.” But for those who have followed AI closely over the past 18 months, this shouldn’t have been a surprise. Chinese LLMs have been very good for a while. For example, Alibaba’s Qwen family of models has consistently ranked at the top of the leaderboards. Qwen2.5, released in September 2024, was immediately recognized as one of the best open-source models available.

What has been surprising, however, is the sheer efficiency of DeepSeek’s model training. DeepSeek-V3 was reportedly trained on roughly 2,000 GPUs (2,048 Nvidia H800s, according to its technical report) at a cost of about $5.5 million, at least two orders of magnitude less than its Western counterparts. DeepSeek achieved this through a combination of innovations, including an improved mixture-of-experts (MoE) architecture, multi-head latent attention (MLA), and lower-precision training (FP8 mixed precision instead of the usual 16-bit formats), to name just a few.

Since the first U.S. chip export bans, I’ve predicted that these restrictions would eventually backfire on the U.S. By limiting access to compute, the restrictions have fueled greater creativity on the algorithmic and data fronts. As the saying goes, necessity is the mother of invention.

So what could this mean for AI going forward? Here are a few thoughts:

-- Compute may be overrated, architecture may be underrated - The narrative that compute is the ultimate differentiator in AI may no longer hold as pretraining approaches the limits of scaling laws and the field moves toward test-time reasoning models. Instead, architectural innovations like those pioneered by DeepSeek may play an increasingly pivotal role. Historically, technologies such as PCs, phones, and software have tended to grow bigger before getting smaller. AI may now be entering its own “compression era.”

-- However, this is unlikely to have a lasting impact on chipmakers like Nvidia (whose stock is down more than 13% today). Compute demand is already shifting toward inference anyway, so smaller pretraining runs shouldn’t significantly reduce overall demand for GPUs.

-- For the first time, an open-source model has achieved near parity with its closed-source counterparts. History shows that whenever open source catches up to closed source, developers overwhelmingly flock to the former, and the latter rarely recovers. If the same happens in AI, DeepSeek could win global mindshare, creating a conundrum for U.S. leadership.

-- Putting geopolitical considerations aside, DeepSeek’s achievement is a big win for the global AI community. Kudos to the DeepSeek team for openly sharing their knowledge and moving the world forward. There shouldn’t be an asterisk next to any of this just because the innovation came out of China. Innovation is innovation.

I'm excited to see what they'll do next.